I'm running cryoSPARC v3.0.1 on RHEL 7.9 with CUDA 11.2 and four NVIDIA GeForce GTX 1080 graphics cards.

After I launch a patch motion correction job, it runs smoothly and successfully aligns a number of frames before crashing with the following error:

File "/home/bio21em1/cryosparc3/cryosparc_worker/cryosparc_compute/jobs/runcommon.py", line 1722, in run_with_except_hook

run_old(*args, **kw)

File "/home/bio21em1/cryosparc3/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.7/threading.py", line 870, in run

self._target(*self._args, **self._kwargs)

File "/home/bio21em1/cryosparc3/cryosparc_worker/cryosparc_compute/jobs/pipeline.py", line 164, in thread_work

work = processor.process(item)

File "cryosparc_worker/cryosparc_compute/jobs/motioncorrection/run_patch.py", line 190, in cryosparc_compute.jobs.motioncorrection.run_patch.run_patch_motion_correction_multi.motionworker.process

File "cryosparc_worker/cryosparc_compute/jobs/motioncorrection/run_patch.py", line 193, in cryosparc_compute.jobs.motioncorrection.run_patch.run_patch_motion_correction_multi.motionworker.process

File "cryosparc_worker/cryosparc_compute/jobs/motioncorrection/run_patch.py", line 195, in cryosparc_compute.jobs.motioncorrection.run_patch.run_patch_motion_correction_multi.motionworker.process

File "cryosparc_worker/cryosparc_compute/jobs/motioncorrection/patchmotion.py", line 251, in cryosparc_compute.jobs.motioncorrection.patchmotion.unbend_motion_correction

File "cryosparc_worker/cryosparc_compute/jobs/motioncorrection/patchmotion.py", line 423, in cryosparc_compute.jobs.motioncorrection.patchmotion.unbend_motion_correction

File "cryosparc_worker/cryosparc_compute/jobs/motioncorrection/patchmotion.py", line 410, in cryosparc_compute.jobs.motioncorrection.patchmotion.unbend_motion_correction.get_framedata

File "cryosparc_worker/cryosparc_compute/engine/newgfourier.py", line 107, in cryosparc_compute.engine.newgfourier.do_fft_plan_inplace

File "/home/bio21em1/cryosparc3/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.7/site-packages/skcuda/cufft.py", line 346, in cufftExecR2C

cufftCheckStatus(status)

File "/home/bio21em1/cryosparc3/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.7/site-packages/skcuda/cufft.py", line 117, in cufftCheckStatus

raise e

skcuda.cufft.cufftInvalidPlan

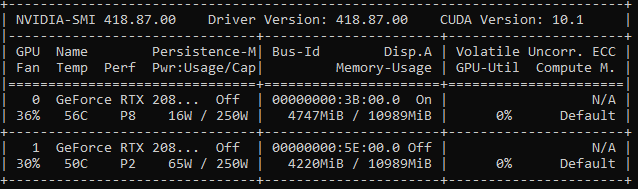

The crash seems to happen randomly (i.e., on a different image within the dataset each time) and doesn’t seem to be related to GPU memory or to the number of GPUs I include in the calculation. The cryoSPARC job log is as follows:

BrokenPipeError: [Errno 32] Broken pipe

Traceback (most recent call last):

File "/home/bio21em1/cryosparc3/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.7/multiprocessing/queues.py", line 242, in _feed

send_bytes(obj)

File "/home/bio21em1/cryosparc3/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.7/multiprocessing/connection.py", line 200, in send_bytes

self._send_bytes(m[offset:offset + size])

File "/home/bio21em1/cryosparc3/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.7/multiprocessing/connection.py", line 404, in _send_bytes

self._send(header + buf)

This seems to be a side effect of the job failing and then becoming uncontactable rather than the cause of the crash. Full-frame motion correction runs fine on the GPUs.
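In case it helps anyone reproduce this, one way to check whether GPU memory is involved is to log per-GPU memory use while the job runs. Below is a minimal sketch, assuming `nvidia-smi` is on the `PATH` of the worker node. The helper names `parse_gpu_memory` and `query_gpu_memory` are hypothetical and not part of cryoSPARC; only the `nvidia-smi` query options are standard.

```python
import subprocess

# Standard nvidia-smi query options: one CSV line per GPU, values in MiB.
NVIDIA_SMI_CMD = [
    "nvidia-smi",
    "--query-gpu=index,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_memory(csv_text):
    """Parse 'index, used, total' lines (MiB) into a list of dicts.

    Hypothetical helper for illustration only.
    """
    rows = []
    for line in csv_text.strip().splitlines():
        if not line.strip():
            continue  # skip blank lines
        index, used, total = (int(field.strip()) for field in line.split(","))
        rows.append(
            {
                "gpu": index,
                "used_mib": used,
                "total_mib": total,
                "used_fraction": used / total,
            }
        )
    return rows


def query_gpu_memory():
    """Run nvidia-smi once and return the parsed per-GPU memory usage."""
    result = subprocess.run(
        NVIDIA_SMI_CMD, check=True, capture_output=True, text=True
    )
    return parse_gpu_memory(result.stdout)
```

Calling `query_gpu_memory()` every few seconds from a separate shell while the patch motion job is running, and noting the values just before the crash, would show whether any card is close to its limit when `cufftInvalidPlan` is raised.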