Hi again. A week ago I wrote about a problem related to the CTF in the Filament Tracer job. I was advised to set the CTF to constant, and that worked. However, I then got a CUDA-related error that I have been trying to solve for a week, without success.

First, I ran it on my laptop (13th Gen Intel(R) Core™ i9-13900H 2.60 GHz, 32.0 GB RAM, 1 TB, RTX 4060, Windows 11) using WSL2 with Ubuntu 20.04, but the job fails with CUDA_ERROR_NOT_INITIALIZED. This is the output of nvidia-smi on that machine: aaronpalpe@MSIPC:~/cryosparc/cryosparc_master$ nvidia-smi

Wed Feb 12 22:29:07 2025

+----------------------------------------------------------------------------------------+

| NVIDIA-SMI 570.86.16 Driver Version: 572.16 CUDA Version: 12.8 |

|----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory Usage | GPU-Util Compute M. |

| | | MIG M. |

|=======================================+==========================+============|

| 0 NVIDIA GeForce RTX 4060 … On | 00000000:01:00.0 Off | N/A |

| N/A 42C P8 3W / 120W | 182MiB / 8188MiB | 6% Default |

| | | N/A |

+---------------------------------------+-------------------------+----------------------+

+----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

I read that this could be caused by the CUDA 12.8 version reported by the driver, which could be incompatible with CryoSPARC, which is on 11.5. I have reinstalled everything several times, with no change. Thinking it could be a WSL2 problem, I also tried a lab computer (described after the log below).

The error log:

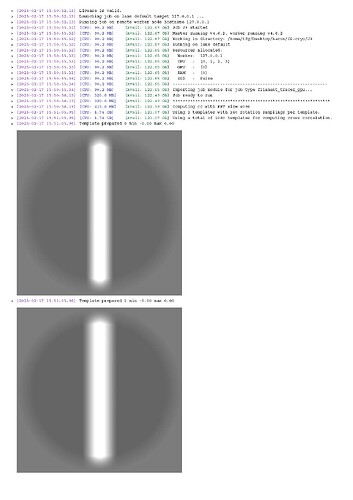

License is valid.

Launching job on lane default target MSIPC. …

Running job on master node hostname MSIPC.

[CPU: 90.3 MB Avail: 13.95 GB]

Job J2 Started

[CPU: 90.3 MB Avail: 13.95 GB]

Master running v4.6.2, worker running v4.6.2

[CPU: 90.3 MB Avail: 13.95 GB]

Working in directory: /home/aaronpalpe/cryosparc13/CS-cryoem/J2

[CPU: 90.3 MB Avail: 13.95 GB]

Running on lane default

[CPU: 90.3 MB Avail: 13.95 GB]

Resources allocated:

[CPU: 90.3 MB Avail: 13.95 GB]

Worker: MSIPC.

[CPU: 90.3 MB Avail: 13.95 GB]

CPU : [0, 1, 2, 3]

[CPU: 90.3 MB Avail: 13.95 GB]

GPU : [0]

[CPU: 90.3 MB Avail: 13.94 GB]

RAM : [0]

[CPU: 90.3 MB Avail: 13.94 GB]

SSD : False

[CPU: 90.3 MB Avail: 13.94 GB]

[CPU: 90.3 MB Avail: 13.94 GB]

Importing job module for job type filament_tracer_gpu…

[CPU: 320.3 MB Avail: 13.79 GB]

Job ready to run

[CPU: 320.3 MB Avail: 13.79 GB]

[CPU: 416.2 MB Avail: 13.70 GB]

Computing cc with FFT size 4096

[CPU: 1.77 GB Avail: 12.35 GB]

Using 3 templates with 360 rotation samplings per template.

[CPU: 1.77 GB Avail: 12.35 GB]

Using a total of 1080 templates for computing cross correlation.

Template prepared 0 min -0.00 max 0.00

Template prepared 1 min -0.00 max 0.00

Template prepared 2 min -0.00 max 0.00

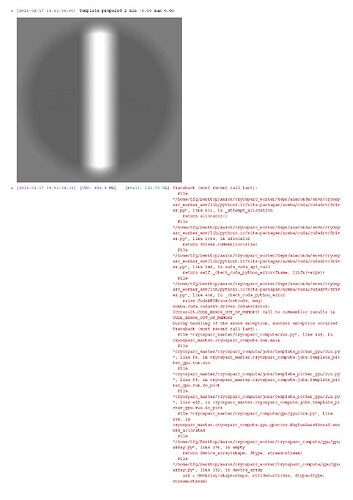

[CPU: 465.9 MB Avail: 13.65 GB]

Traceback (most recent call last):

File “cryosparc_master/cryosparc_compute/run.py”, line 129, in cryosparc_master.cryosparc_compute.run.main

File “cryosparc_master/cryosparc_compute/jobs/template_picker_gpu/run.py”, line 56, in cryosparc_master.cryosparc_compute.jobs.template_picker_gpu.run.run

File “cryosparc_master/cryosparc_compute/jobs/template_picker_gpu/run.py”, line 99, in cryosparc_master.cryosparc_compute.jobs.template_picker_gpu.run.do_pick

File “cryosparc_master/cryosparc_compute/jobs/template_picker_gpu/run.py”, line 347, in cryosparc_master.cryosparc_compute.jobs.template_picker_gpu.run.do_pick

File “/home/aaronpalpe/cryosparc13/cryosparc_worker/cryosparc_compute/skcuda_internal/fft.py”, line 112, in init

self.handle = cryosparc_gpu.gpufft_get_plan(

RuntimeError: cuda failure (driver API): cuCtxGetDevice(&device) → CUDA_ERROR_NOT_INITIALIZED initialization error
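The CUDA_ERROR_NOT_INITIALIZED failure in the traceback above can be checked outside CryoSPARC by calling the driver's `cuInit` directly. The following is a minimal sketch, not part of CryoSPARC: the function name `check_cuda_driver` and the candidate library paths are assumptions (the second path is where WSL2 normally exposes the Windows driver's `libcuda`). If `cuInit` succeeds here, the driver itself is usable from this shell and the problem more likely lies in how the job process reaches it.

```python
import ctypes

# Candidate locations for the CUDA driver library. The second entry is the
# usual WSL2 location and is an assumption about this particular setup.
CANDIDATES = ("libcuda.so.1", "/usr/lib/wsl/lib/libcuda.so.1")

# A few CUresult codes from the CUDA driver API, for readable messages.
CU_RESULT_NAMES = {
    0: "CUDA_SUCCESS",
    3: "CUDA_ERROR_NOT_INITIALIZED",
    100: "CUDA_ERROR_NO_DEVICE",
    999: "CUDA_ERROR_UNKNOWN",
}


def check_cuda_driver(candidates=CANDIDATES):
    """Load libcuda, call cuInit(0), and return (code, message).

    code is None when no candidate library could be loaded.
    """
    for name in candidates:
        try:
            lib = ctypes.CDLL(name)
        except OSError:
            continue  # library not found at this location, try the next one
        code = lib.cuInit(0)
        label = CU_RESULT_NAMES.get(code, "unlisted CUresult")
        if code == 0:
            count = ctypes.c_int(0)
            lib.cuDeviceGetCount(ctypes.byref(count))
            return code, f"cuInit OK via {name}: {count.value} device(s) visible"
        return code, f"cuInit failed via {name}: {code} ({label})"
    return None, "libcuda could not be loaded from any candidate path"


if __name__ == "__main__":
    print(check_cuda_driver()[1])
```

Running this with the Python interpreter from the worker environment would test the same environment the job uses.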

I then tried on a lab computer:

13th Gen Intel Core i7-13700KF x 24, 128 GB RAM, 13 TB, Ubuntu 22.04.5 LTS, ASUS system. This is the output of nvidia-smi there: tfg@tfg:~/Desktop/Aaron/cryosparc_master/bin$ nvidia-smi

Mon Feb 17 15:48:39 2025

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 550.144.03 Driver Version: 550.144.03 CUDA Version: 12.4 |

|----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory Usage | GPU-Util Compute M. |

| | | MIG M. |

|=======================================+==========================+============|

| 0 NVIDIA GeForce RTX 4090 Off | 00000000:01:00.0 On | Off |

| 0% 44C P8 19W / 450W | 370MiB / 24564MiB | 8% Default |

| | | N/A |

+---------------------------------------+-------------------------+----------------------+

+----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| 0 N/A N/A 2002 G /usr/lib/xorg/Xorg 116MiB |

| 0 N/A N/A 2160 G /usr/bin/gnome-shell 42MiB |

| 0 N/A N/A 2344 G …3/usr/bin/snapd-desktop-integration 12MiB |

| 0 N/A N/A 25117 G …irefox/5751/usr/lib/firefox/firefox 175MiB |

+----------------------------------------------------------------------------+

On this machine the error is CUDA_ERROR_OUT_OF_MEMORY, even though I am only passing one 1024x1024 image, imported with the following parameters:

Micrographs data path:

/home/aaronpalpe/cryosparc/imagen.mrc

Pixel size (A):

34.48

Accelerating Voltage (kV):

300

Spherical Aberration (mm):

2.7

Total exposure dose (e/A^2):

0.668

Constant CTF is on. In the Filament Tracer I set a filament diameter of 1000 A, a spacing between filaments of 1.5, a maximum diameter of 1200 and a minimum diameter of 800.
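For reference, the parameters above can be converted from angstroms to pixels to see the scale the picker works at. This is only an arithmetic sketch: it assumes the minimum and maximum diameters are in angstroms like the filament diameter, and the helper name `angstrom_to_pixels` is illustrative. It does not by itself explain the out-of-memory error.

```python
# Values copied from the parameters listed above.
PIXEL_SIZE_A = 34.48   # angstroms per pixel
IMAGE_SIZE_PX = 1024   # image is 1024 x 1024 pixels

# Diameters from the Filament Tracer settings; min/max are assumed to be in angstroms.
DIAMETERS_A = {"filament": 1000.0, "minimum": 800.0, "maximum": 1200.0}


def angstrom_to_pixels(length_a, pixel_size_a=PIXEL_SIZE_A):
    """Convert a physical length in angstroms to a length in pixels."""
    return length_a / pixel_size_a


for name, diameter in DIAMETERS_A.items():
    print(f"{name} diameter: {diameter:.0f} A = {angstrom_to_pixels(diameter):.1f} px")

# Physical width of the whole image, in nanometres (10 A = 1 nm).
field_of_view_nm = IMAGE_SIZE_PX * PIXEL_SIZE_A / 10
print(f"field of view: {field_of_view_nm:.0f} nm")
```

With these numbers the filament is about 29 pixels wide in an image about 3.5 micrometres across.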

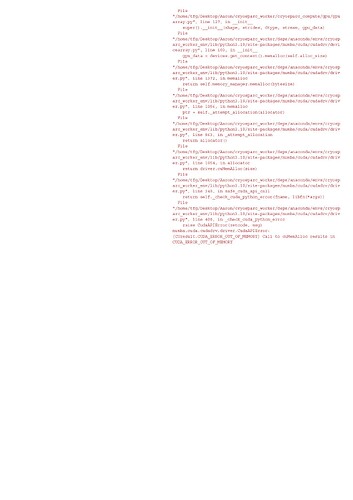

Specifically the error is:

[CPU: 679.3 MB Avail: 121.63 GB]

Traceback (most recent call last):

File “/home/tfg/Desktop/Aaron/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py”, line 851, in _attempt_allocation

return allocator()

File “/home/tfg/Desktop/Aaron/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py”, line 1054, in allocator

return driver.cuMemAlloc(size)

File “/home/tfg/Desktop/Aaron/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py”, line 348, in safe_cuda_api_call

return self._check_cuda_python_error(fname, libfn(*args))

File “/home/tfg/Desktop/Aaron/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py”, line 408, in _check_cuda_python_error

raise CudaAPIError(retcode, msg)

numba.cuda.cudadrv.driver.CudaAPIError: [CUresult.CUDA_ERROR_OUT_OF_MEMORY] Call to cuMemAlloc results in CUDA_ERROR_OUT_OF_MEMORY

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File “cryosparc_master/cryosparc_compute/run.py”, line 129, in cryosparc_master.cryosparc_compute.run.main

File “cryosparc_master/cryosparc_compute/jobs/template_picker_gpu/run.py”, line 56, in cryosparc_master.cryosparc_compute.jobs.template_picker_gpu.run.run

File “cryosparc_master/cryosparc_compute/jobs/template_picker_gpu/run.py”, line 99, in cryosparc_master.cryosparc_compute.jobs.template_picker_gpu.run.do_pick

File “cryosparc_master/cryosparc_compute/jobs/template_picker_gpu/run.py”, line 422, in cryosparc_master.cryosparc_compute.jobs.template_picker_gpu.run.do_pick

File “cryosparc_master/cryosparc_compute/gpu/gpucore.py”, line 398, in cryosparc_master.cryosparc_compute.gpu.gpucore.EngineBaseThread.ensure_allocated

File “/home/tfg/Desktop/Aaron/cryosparc_worker/cryosparc_compute/gpu/gpuarray.py”, line 376, in empty

return device_array(shape, dtype, stream=stream)

File “/home/tfg/Desktop/Aaron/cryosparc_worker/cryosparc_compute/gpu/gpuarray.py”, line 332, in device_array

arr = GPUArray(shape=shape, strides=strides, dtype=dtype, stream=stream)

File “/home/tfg/Desktop/Aaron/cryosparc_worker/cryosparc_compute/gpu/gpuarray.py”, line 127, in init

super().init(shape, strides, dtype, stream, gpu_data)

File “/home/tfg/Desktop/Aaron/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/devicearray.py”, line 103, in init

gpu_data = devices.get_context().memalloc(self.alloc_size)

File “/home/tfg/Desktop/Aaron/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py”, line 1372, in memalloc

return self.memory_manager.memalloc(bytesize)

File “/home/tfg/Desktop/Aaron/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py”, line 1056, in memalloc

ptr = self._attempt_allocation(allocator)

File “/home/tfg/Desktop/Aaron/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py”, line 863, in _attempt_allocation

return allocator()

File “/home/tfg/Desktop/Aaron/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py”, line 1054, in allocator

return driver.cuMemAlloc(size)

File “/home/tfg/Desktop/Aaron/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py”, line 348, in safe_cuda_api_call

return self._check_cuda_python_error(fname, libfn(*args))

File “/home/tfg/Desktop/Aaron/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py”, line 408, in _check_cuda_python_error

raise CudaAPIError(retcode, msg)

numba.cuda.cudadrv.driver.CudaAPIError: [CUresult.CUDA_ERROR_OUT_OF_MEMORY] Call to cuMemAlloc results in CUDA_ERROR_OUT_OF_MEMORY
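Since nvidia-smi reports only a few hundred MiB in use on the 24 GB card when idle, it may help to record GPU memory while the job is running, to see how much it actually requests before `cuMemAlloc` fails. A minimal sketch, assuming `nvidia-smi` is on the PATH; the function names are illustrative and not part of CryoSPARC:

```python
import subprocess

# nvidia-smi query that prints one CSV row per GPU, values in MiB without units.
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_memory_rows(text):
    """Parse rows such as '0, 370, 24564' into dictionaries (values in MiB)."""
    rows = []
    for line in text.strip().splitlines():
        index, used, total = (int(field.strip()) for field in line.split(","))
        rows.append(
            {"gpu": index, "used_mib": used, "free_mib": total - used, "total_mib": total}
        )
    return rows


def query_gpu_memory():
    """Run nvidia-smi once and return the parsed per-GPU memory usage."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_memory_rows(result.stdout)


# Example use while the job is running (requires an NVIDIA driver):
#     for row in query_gpu_memory():
#         print(row)
```

Calling `query_gpu_memory()` in a loop with a short sleep while the job runs would show whether usage climbs toward the card's limit or the failure happens on a single very large allocation.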

I have attached images from the PDF of the log above.

Thank you, and sorry for the inconvenience.