Hi there,

My Ab-initio reconstruction job suddenly failed, but I got no error message. Digging through the forum, I found other users who have faced a similar issue with the RTX 3090. Any idea what could be causing it and how to fix it?

Thanks,

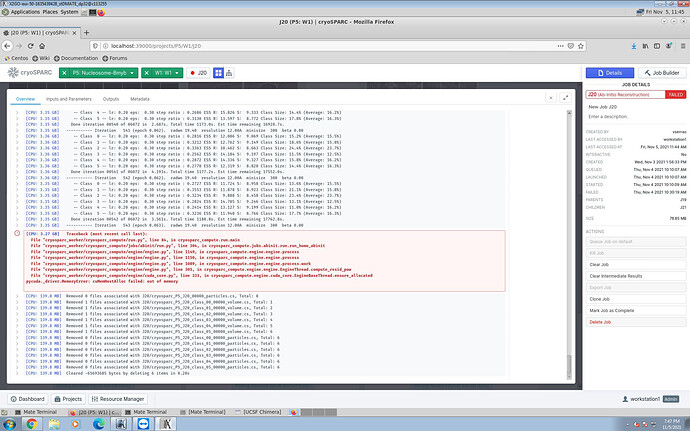

cryosparcm joblog P5 J20

========= sending heartbeat

HOST ALLOCATION FUNCTION: using cudrv.pagelocked_empty

HOST ALLOCATION FUNCTION: using cudrv.pagelocked_empty

HOST ALLOCATION FUNCTION: using cudrv.pagelocked_empty

HOST ALLOCATION FUNCTION: using cudrv.pagelocked_empty

HOST ALLOCATION FUNCTION: using cudrv.pagelocked_empty

HOST ALLOCATION FUNCTION: using cudrv.pagelocked_empty

HOST ALLOCATION FUNCTION: using cudrv.pagelocked_empty

HOST ALLOCATION FUNCTION: using cudrv.pagelocked_empty

HOST ALLOCATION FUNCTION: using cudrv.pagelocked_empty

========= sending heartbeat

HOST ALLOCATION FUNCTION: using cudrv.pagelocked_empty

HOST ALLOCATION FUNCTION: using cudrv.pagelocked_empty

HOST ALLOCATION FUNCTION: using cudrv.pagelocked_empty

HOST ALLOCATION FUNCTION: using cudrv.pagelocked_empty

HOST ALLOCATION FUNCTION: using cudrv.pagelocked_empty

HOST ALLOCATION FUNCTION: using cudrv.pagelocked_empty

HOST ALLOCATION FUNCTION: using cudrv.pagelocked_empty

HOST ALLOCATION FUNCTION: using cudrv.pagelocked_empty

**** handle exception rc

set status to failed

========= main process now complete.

========= monitor process now complete.

Waiting for data… (interrupt to abort)

Hi @vitorserrao,

What version of CUDA did you install cryoSPARC with? Can you also paste the contents of cryosparc_worker/config.sh and the output of the command nvidia-smi?
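When pasting these outputs into a public thread, the license ID should stay private. A minimal sketch of one way to do that, assuming a POSIX shell with `sed`; the helper name `redact_config` and the example path are hypothetical and not part of cryoSPARC:

```shell
# Print a worker config file with the license ID masked, so the rest
# can be pasted into a forum post. redact_config is an illustrative
# helper, not a cryoSPARC command.
redact_config() {
    sed -E 's/^(export CRYOSPARC_LICENSE_ID=).*/\1"REDACTED"/' "$1"
}

# Example usage (the path is an assumption; adjust to your install):
#   redact_config /path/to/cryosparc_worker/config.sh
#   nvidia-smi
```

The file itself is left untouched; only the printed copy is masked.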

Hi @stephan,

I used CUDA 11.1 and cryoSPARC v3.2.0. Below are the outputs:

nvidia-smi:

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 460.73.01 Driver Version: 460.73.01 CUDA Version: 11.2 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 GeForce RTX 3090 Off | 00000000:1A:00.0 Off | N/A |

| 0% 42C P8 23W / 350W | 1MiB / 24268MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 1 GeForce RTX 3090 Off | 00000000:68:00.0 Off | N/A |

| 0% 43C P8 21W / 350W | 23MiB / 24267MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

config.sh:

export CRYOSPARC_LICENSE_ID="OUR_CORRECT_LICENSE_NUMBER"

export CRYOSPARC_USE_GPU=true

export CRYOSPARC_CUDA_PATH="/usr/local/cuda"

export CRYOSPARC_DEVELOP=false

Thanks for your response. CUDA looks good.

Can you try this job on a different GPU or different dataset? Does the ab-initio job die every time?

It worked well on the T20 tutorial dataset that I used to check the installation. Both cryoSPARC and cryoSPARC Live ran nicely and I haven’t had any issues. So far it only dies with this particular dataset. I also tried different numbers of initial models (1, 2, 4, and 6), and it always dies without an error message.

Hi @vitorserrao,

Thanks for your response. Can you provide screenshots of, or copy and paste the output from, the “Overview” tab of the failed Ab-Initio job, including the point where it dies?

Hi @stephan,

Sure, this is the final part of it. There was no apparent error message in the cryosparcm joblog output, and I only found this one after a very long search through the Overview tab (I should have found it earlier, my apologies).

The job ran for a while before I got this message; however, there is still free space on the cards and on disk.

Hi @vitorserrao,

Can you add export CRYOSPARC_NO_PAGELOCK=true to cryosparc_worker/config.sh, then re-run the job?
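The config change suggested above could be applied along these lines. This is only a sketch, assuming a POSIX shell; the helper name `add_no_pagelock` is hypothetical, and the path to config.sh depends on where cryoSPARC was installed:

```shell
# Append the setting to a worker config file unless a line for it already exists.
# add_no_pagelock is an illustrative helper, not a cryoSPARC command.
add_no_pagelock() {
    config_file="$1"
    if [ ! -f "$config_file" ]; then
        echo "config file not found: $config_file" >&2
        return 1
    fi
    if grep -q '^export CRYOSPARC_NO_PAGELOCK=' "$config_file"; then
        echo "CRYOSPARC_NO_PAGELOCK is already set in $config_file"
    else
        printf '\nexport CRYOSPARC_NO_PAGELOCK=true\n' >> "$config_file"
        echo "added CRYOSPARC_NO_PAGELOCK=true to $config_file"
    fi
}

# Example usage (the path is an assumption; adjust to your install):
#   add_no_pagelock /path/to/cryosparc_worker/config.sh
```

Checking for an existing line first keeps the file from collecting duplicate exports if the helper is run more than once.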

Hi @stephan,

I have already figured it out. We had some memory allocation problems, which are now solved.

Thanks anyway!

Vitor

Hi @vitorserrao,

How did you solve the memory allocation problems?

Hi @nikydna,

It has been a while, but I think I downsampled the particles and restarted the job.
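As a rough illustration of why downsampling can ease memory pressure: if particle images are held as 32-bit floats (an assumption here), a stack of N images takes about N × box² × 4 bytes, so halving the box size cuts that to a quarter. The particle count and box sizes below are made-up numbers, the helper name `stack_bytes` is hypothetical, and the estimate ignores everything else the job allocates:

```shell
# Approximate size in bytes of N square particle images stored as float32.
# Arguments: particle count, box size in pixels.
stack_bytes() {
    echo $(( $1 * $2 * $2 * 4 ))
}

stack_bytes 100000 256   # original box size
stack_bytes 100000 128   # after 2x downsampling
```

With these example numbers the estimate drops from 26214400000 bytes (about 26 GB) to 6553600000 bytes (about 6.5 GB).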