Dear @wtempel,

Apologies for the delay; I had to liaise with our cluster managers and do a bit more troubleshooting.

Here are the outputs:

$ cryosparcm cli "get_job('P46', 'J242', 'job_type', 'version', 'instance_information', 'status', 'params_spec', 'errors_run', 'input_slot_groups', 'started_at')"

{‘_id’: ‘67a550eb0d1cd394b979b233’, ‘errors_run’: , ‘input_slot_groups’: [{‘connections’: [{‘group_name’: ‘particles_selected’, ‘job_uid’: ‘J31’, ‘slots’: [{‘group_name’: ‘particles_selected’, ‘job_uid’: ‘J31’, ‘result_name’: ‘blob’, ‘result_type’: ‘particle.blob’, ‘slot_name’: ‘blob’, ‘version’: ‘F’}, {‘group_name’: ‘particles_selected’, ‘job_uid’: ‘J31’, ‘result_name’: ‘ctf’, ‘result_type’: ‘particle.ctf’, ‘slot_name’: ‘ctf’, ‘version’: ‘F’}, {‘group_name’: ‘particles_selected’, ‘job_uid’: ‘J31’, ‘result_name’: ‘alignments2D’, ‘result_type’: ‘particle.alignments2D’, ‘slot_name’: None, ‘version’: ‘F’}, {‘group_name’: ‘particles_selected’, ‘job_uid’: ‘J31’, ‘result_name’: ‘pick_stats’, ‘result_type’: ‘particle.pick_stats’, ‘slot_name’: None, ‘version’: ‘F’}, {‘group_name’: ‘particles_selected’, ‘job_uid’: ‘J31’, ‘result_name’: ‘location’, ‘result_type’: ‘particle.location’, ‘slot_name’: None, ‘version’: ‘F’}]}], ‘count_max’: inf, ‘count_min’: 1, ‘description’: ‘Particle stacks to use. Multiple stacks will be concatenated.’, ‘name’: ‘particles’, ‘repeat_allowed’: False, ‘slots’: [{‘description’: ‘’, ‘name’: ‘blob’, ‘optional’: False, ‘title’: ‘Particle data blobs’, ‘type’: ‘particle.blob’}, {‘description’: ‘’, ‘name’: ‘ctf’, ‘optional’: False, ‘title’: ‘Particle ctf parameters’, ‘type’: ‘particle.ctf’}, {‘description’: ‘’, ‘name’: ‘alignments3D’, ‘optional’: True, ‘title’: ‘Computed alignments (optional – only used to passthrough half set splits.)’, ‘type’: ‘particle.alignments3D’}, {‘description’: ‘’, ‘name’: ‘filament’, ‘optional’: True, ‘title’: ‘Particle filament info’, ‘type’: ‘particle.filament’}], ‘title’: ‘Particle stacks’, ‘type’: ‘particle’}], ‘instance_information’: {‘CUDA_version’: ‘11.8’, ‘available_memory’: ‘950.83GB’, ‘cpu_model’: ‘Intel(R) Xeon(R) Gold 6230R CPU @ 2.10GHz’, ‘driver_version’: ‘12.2’, ‘gpu_info’: [{‘id’: 0, ‘mem’: 15655829504, ‘name’: ‘Tesla T4’, ‘pcie’: ‘0000:12:00’}], ‘ofd_hard_limit’: 524288, ‘ofd_soft_limit’: 1024, 
‘physical_cores’: 52, ‘platform_architecture’: ‘x86_64’, ‘platform_node’: ‘m3t101’, ‘platform_release’: ‘5.14.0-284.25.1.el9_2.x86_64’, ‘platform_version’: ‘#1 SMP PREEMPT_DYNAMIC Wed Aug 2 14:53:30 UTC 2023’, ‘total_memory’: ‘1006.60GB’, ‘used_memory’: ‘50.98GB’}, ‘job_type’: ‘homo_abinit’, ‘params_spec’: {‘abinit_K’: {‘value’: 3}, ‘abinit_class_anneal_beta’: {‘value’: 0.5}, ‘compute_use_ssd’: {‘value’: False}}, ‘project_uid’: ‘P46’, ‘started_at’: ‘Fri, 07 Feb 2025 00:16:59 GMT’, ‘status’: ‘completed’, ‘uid’: ‘J242’, ‘version’: ‘v4.5.3’}

$ cryosparcm cli "get_job('P46', 'J252', 'job_type', 'version', 'instance_information', 'status', 'params_spec', 'errors_run', 'input_slot_groups', 'started_at')"

{‘_id’: ‘67a57c4b0d1cd394b97e36b2’, ‘errors_run’: [{‘message’: ‘’, ‘warning’: False}], ‘input_slot_groups’: [{‘connections’: [{‘group_name’: ‘particles_selected’, ‘job_uid’: ‘J132’, ‘slots’: [{‘group_name’: ‘particles_selected’, ‘job_uid’: ‘J132’, ‘result_name’: ‘blob’, ‘result_type’: ‘particle.blob’, ‘slot_name’: ‘blob’, ‘version’: ‘F’}, {‘group_name’: ‘particles_selected’, ‘job_uid’: ‘J132’, ‘result_name’: ‘ctf’, ‘result_type’: ‘particle.ctf’, ‘slot_name’: ‘ctf’, ‘version’: ‘F’}, {‘group_name’: ‘particles_selected’, ‘job_uid’: ‘J132’, ‘result_name’: ‘alignments2D’, ‘result_type’: ‘particle.alignments2D’, ‘slot_name’: None, ‘version’: ‘F’}, {‘group_name’: ‘particles_selected’, ‘job_uid’: ‘J132’, ‘result_name’: ‘pick_stats’, ‘result_type’: ‘particle.pick_stats’, ‘slot_name’: None, ‘version’: ‘F’}, {‘group_name’: ‘particles_selected’, ‘job_uid’: ‘J132’, ‘result_name’: ‘location’, ‘result_type’: ‘particle.location’, ‘slot_name’: None, ‘version’: ‘F’}]}], ‘count_max’: inf, ‘count_min’: 1, ‘description’: ‘Particle stacks to use. 
Multiple stacks will be concatenated.’, ‘name’: ‘particles’, ‘repeat_allowed’: False, ‘slots’: [{‘description’: ‘’, ‘name’: ‘blob’, ‘optional’: False, ‘title’: ‘Particle data blobs’, ‘type’: ‘particle.blob’}, {‘description’: ‘’, ‘name’: ‘ctf’, ‘optional’: False, ‘title’: ‘Particle ctf parameters’, ‘type’: ‘particle.ctf’}, {‘description’: ‘’, ‘name’: ‘alignments3D’, ‘optional’: True, ‘title’: ‘Computed alignments (optional – only used to passthrough half set splits.)’, ‘type’: ‘particle.alignments3D’}, {‘description’: ‘’, ‘name’: ‘filament’, ‘optional’: True, ‘title’: ‘Particle filament info’, ‘type’: ‘particle.filament’}], ‘title’: ‘Particle stacks’, ‘type’: ‘particle’}], ‘instance_information’: {‘CUDA_version’: ‘11.8’, ‘available_memory’: ‘953.83GB’, ‘cpu_model’: ‘Intel(R) Xeon(R) Gold 6230R CPU @ 2.10GHz’, ‘driver_version’: ‘12.2’, ‘gpu_info’: [{‘id’: 0, ‘mem’: 15655829504, ‘name’: ‘Tesla T4’, ‘pcie’: ‘0000:12:00’}], ‘ofd_hard_limit’: 524288, ‘ofd_soft_limit’: 1024, ‘physical_cores’: 52, ‘platform_architecture’: ‘x86_64’, ‘platform_node’: ‘m3t101’, ‘platform_release’: ‘5.14.0-284.25.1.el9_2.x86_64’, ‘platform_version’: ‘#1 SMP PREEMPT_DYNAMIC Wed Aug 2 14:53:30 UTC 2023’, ‘total_memory’: ‘1006.60GB’, ‘used_memory’: ‘48.20GB’}, ‘job_type’: ‘homo_abinit’, ‘params_spec’: {‘abinit_K’: {‘value’: 3}, ‘abinit_class_anneal_beta’: {‘value’: 0}, ‘compute_use_ssd’: {‘value’: False}}, ‘project_uid’: ‘P46’, ‘started_at’: ‘Fri, 07 Feb 2025 03:22:02 GMT’, ‘status’: ‘failed’, ‘uid’: ‘J252’, ‘version’: ‘v4.5.3’}
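
To make the two outputs above easier to compare, here is a minimal Python sketch that diffs their `params_spec` entries. The dictionaries are copied by hand from the outputs for J242 and J252; the helper name `diff_params` is made up for illustration and is not part of CryoSPARC.

```python
# Parameter overrides copied by hand from the two get_job outputs above.
j242_params = {  # status: completed
    "abinit_K": {"value": 3},
    "abinit_class_anneal_beta": {"value": 0.5},
    "compute_use_ssd": {"value": False},
}
j252_params = {  # status: failed
    "abinit_K": {"value": 3},
    "abinit_class_anneal_beta": {"value": 0},
    "compute_use_ssd": {"value": False},
}


def diff_params(a, b):
    """Hypothetical helper: return {name: (value_in_a, value_in_b)} for parameters that differ."""
    diffs = {}
    for name in sorted(set(a) | set(b)):
        # A parameter missing from one spec is reported as None on that side.
        va = a.get(name, {}).get("value")
        vb = b.get(name, {}).get("value")
        if va != vb:
            diffs[name] = (va, vb)
    return diffs


param_diff = diff_params(j242_params, j252_params)
for name, (ok_value, failed_value) in param_diff.items():
    print(f"{name}: J242 (completed) = {ok_value}, J252 (failed) = {failed_value}")
```

Apart from the input particles (J31 for J242, J132 for J252), the only listed parameter that differs is `abinit_class_anneal_beta` (0.5 versus 0). Whether that difference is related to the failure is not established by these outputs.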

$ cryosparcm joblog P46 J254 | tail -n 40

========= sending heartbeat at 2025-02-14 11:47:55.229980

========= sending heartbeat at 2025-02-14 11:48:05.243461

========= sending heartbeat at 2025-02-14 11:48:15.256215

========= sending heartbeat at 2025-02-14 11:48:25.262897

========= sending heartbeat at 2025-02-14 11:48:35.277559

========= sending heartbeat at 2025-02-14 11:48:45.292215

========= sending heartbeat at 2025-02-14 11:48:55.305206

========= sending heartbeat at 2025-02-14 11:49:05.318924

========= sending heartbeat at 2025-02-14 11:49:15.332998

========= sending heartbeat at 2025-02-14 11:49:25.347194

========= sending heartbeat at 2025-02-14 11:49:35.360204

========= sending heartbeat at 2025-02-14 11:49:45.373212

========= sending heartbeat at 2025-02-14 11:49:55.386790

========= sending heartbeat at 2025-02-14 11:50:05.402358

========= sending heartbeat at 2025-02-14 11:50:15.407858

========= sending heartbeat at 2025-02-14 11:50:25.421297

========= sending heartbeat at 2025-02-14 11:50:35.434206

========= sending heartbeat at 2025-02-14 11:50:45.448348

========= sending heartbeat at 2025-02-14 11:50:55.462205

========= sending heartbeat at 2025-02-14 11:51:05.475556

========= sending heartbeat at 2025-02-14 11:51:15.489803

========= sending heartbeat at 2025-02-14 11:51:25.503334

========= sending heartbeat at 2025-02-14 11:51:35.516202

========= sending heartbeat at 2025-02-14 11:51:45.529204

========= sending heartbeat at 2025-02-14 11:51:55.543954

========= sending heartbeat at 2025-02-14 11:52:05.559207

/apps/cryosparc/cryosparc-general/4.5.3/cryosparc_worker/cryosparc_compute/util/logsumexp.py:41: RuntimeWarning: divide by zero encountered in log

return n.log(wa * n.exp(a - vmax) + wb * n.exp(b - vmax) ) + vmax

:1: UserWarning: Cannot manually free CUDA array; will be freed when garbage collected

========= sending heartbeat at 2025-02-14 11:52:15.573200

========= sending heartbeat at 2025-02-14 11:52:25.586943

2025-02-14 11:52:30,358 del INFO | Deleting plot real-slice-000

2025-02-14 11:52:30,386 del INFO | Deleting plot viewing_dist-000

2025-02-14 11:52:30,400 del INFO | Deleting plot real-slice-001

2025-02-14 11:52:30,426 del INFO | Deleting plot viewing_dist-001

2025-02-14 11:52:30,439 del INFO | Deleting plot real-slice-002

2025-02-14 11:52:30,464 del INFO | Deleting plot viewing_dist-002

2025-02-14 11:52:30,477 del INFO | Deleting plot noise_model

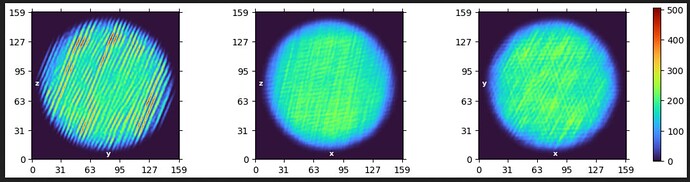

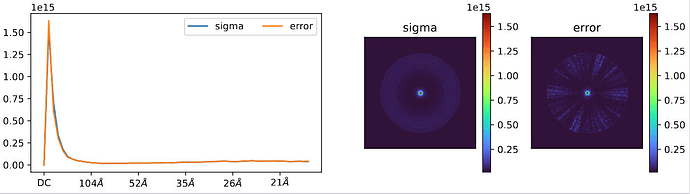

I have also been looking into exactly where the issue occurs. It turns out that I started getting this error with ab-initio at some point while I was trying to get rid of junk particles through 2D classification. So, as mentioned earlier, to replicate the scenario I cloned an “unsuccessful” job and connected the “successful” particles to it, and the ab-initio succeeded. However, when I ran those “successful” particles through 2 more rounds of 2D classification and ran ab-initio again, it failed. Happy to post any details of these jobs as required. Please advise.