Hi everyone,

I am wondering if you have seen this error message before. I am attempting 3D Flex Refine to resolve some flexible parts of my protein.

So far I have been following the guide, and I’m currently stuck at the Mesh Prep job.

When I ran into this, I tried adjusting the mask threshold and the map threshold before segmenting. I then made sure that the map segments cover the entire map, and I confirmed that the mask from the failed job covers the entire map as well as the segments. This got me past the “mask not cover entire segment map” kind of error.

But I am still stuck on the error below. As far as I understand, the segmented map needs to fit nicely with the filtered consensus volume, but I don’t know how to limit the threshold of the consensus map for this job. Or do I need to do something else?

Would love to hear your inputs! Have a nice day!

Cheers,

Khoa

Traceback (most recent call last):

File "cryosparc_master/cryosparc_compute/run.py", line 115, in cryosparc_master.cryosparc_compute.run.main

File "cryosparc_master/cryosparc_compute/jobs/flex_refine/run_meshprep.py", line 167, in cryosparc_master.cryosparc_compute.jobs.flex_refine.run_meshprep.run

File "cryosparc_master/cryosparc_compute/jobs/flex_refine/tetraseg.py", line 105, in cryosparc_master.cryosparc_compute.jobs.flex_refine.tetraseg.segment_and_fuse_tetra

AssertionError: All voxels in mask should have been assigned to a region but were not.

Hi @lecongmi001! Sorry you’re running into issues during mesh prep!

Did you perform your segmentation on the original, full-size map? The segmentation has the same size as the map, so if you segment the full-size map the resulting .seg file is likely larger than the input to mesh prep, which would be the downsampled map from 3D Flex Data Prep.

Unfortunately, as of right now, the easiest way to resolve this issue is to re-perform segmentation on the downsampled map (from Data Prep). I will add a note to the guide to this effect!
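A quick way to rule out the box-size mismatch described above is to compare the grid shapes of the segmentation and the downsampled map. The snippet below is only a sketch: it assumes both volumes have already been loaded as NumPy arrays by whatever reader you use, and `grids_match` is a hypothetical helper, not part of CryoSPARC.

```python
import numpy as np

def grids_match(seg_labels: np.ndarray, flex_map: np.ndarray) -> bool:
    """Return True when the segmentation and the map share the same box size."""
    return seg_labels.shape == flex_map.shape

# Toy stand-ins: a segmentation made on a full-size 64^3 box versus
# a 32^3 downsampled map like the one Mesh Prep would receive.
full_size_seg = np.zeros((64, 64, 64), dtype=np.uint8)
downsampled_map = np.zeros((32, 32, 32), dtype=np.float32)

if not grids_match(full_size_seg, downsampled_map):
    print("Box sizes differ:", full_size_seg.shape, "vs", downsampled_map.shape)
```

If the shapes differ, the segmentation was most likely made on the wrong map.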

Hi @rwaldo ,

No, I performed the segmentation on the downsampled map from Data Prep, as you mentioned. I think the parameter I need to tweak next is the contour level of the downsampled map before segmenting. Since the variable region causes a lot of noise, if I segment at a higher contour level that removes the noise from the flexible region, maybe the segmentation will no longer include the noise? Or should I apply a Gaussian filter to the downsampled map first?

After redoing the segmentation about 5 times, I realized that the issue could be due to the contour level I used for the downsampled map. I need to set it so that the noisy density from the flexible area disappears before segmenting; otherwise the segmentation will generate invisible segments that cannot be covered by the mask.
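For anyone wanting to spot such stray segments without re-segmenting blindly, here is a rough sketch. It assumes the segmentation is available as an integer label array (0 = background) and the mask as a boolean array on the same grid; `summarize_segments` and the `min_voxels` cutoff are made up for illustration, and this is not how Mesh Prep checks things internally.

```python
import numpy as np

def summarize_segments(labels, mask, min_voxels=50):
    """Report very small segments and segments with voxels outside the mask."""
    mask = mask.astype(bool)
    # Voxel count for every non-background segment id.
    ids, counts = np.unique(labels[labels > 0], return_counts=True)
    tiny = [int(i) for i, c in zip(ids, counts) if c < min_voxels]
    # Segment ids that have at least one voxel outside the mask.
    outside = np.unique(labels[(labels > 0) & ~mask])
    return {
        "n_segments": len(ids),
        "tiny": tiny,
        "outside_mask": [int(i) for i in outside],
    }

# Toy example: one solid segment inside the mask, one single-voxel
# "noise" segment in the corner of the box, outside the mask.
labels = np.zeros((16, 16, 16), dtype=np.int32)
labels[2:10, 2:10, 2:10] = 1
labels[15, 15, 15] = 2
mask = np.zeros((16, 16, 16), dtype=bool)
mask[0:12, 0:12, 0:12] = True

report = summarize_segments(labels, mask)
print(report)
```

Segments that show up in `tiny` or `outside_mask` are the ones a higher contour level or a filtered map would likely remove.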

Ah, yes, if there is a lot of noise in the original segmentation, it is probably best to low-pass filter the map. Recall that the segments are expanded to fill the entire mask, so they don’t need to perfectly tile the entire mask.

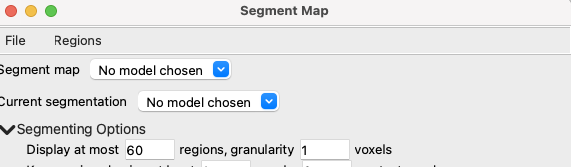

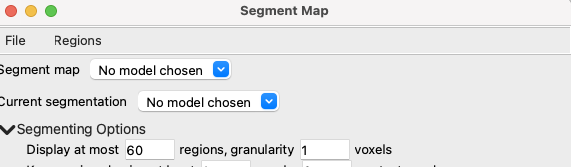
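The low-pass filtering suggested above can be sketched in plain NumPy as a Gaussian filter applied in Fourier space. This is an illustration only, assuming the downsampled map is a 3D array; `gaussian_lowpass` and the `sigma_vox` value are hypothetical, boundaries are treated as periodic, and it is not the filter CryoSPARC applies.

```python
import numpy as np

def gaussian_lowpass(volume, sigma_vox):
    """Blur a 3D volume with a Gaussian of std sigma_vox (in voxels) via FFT."""
    # Spatial frequencies in cycles per voxel along each axis.
    freqs = [np.fft.fftfreq(n) for n in volume.shape]
    kz, ky, kx = np.meshgrid(*freqs, indexing="ij")
    k2 = kz**2 + ky**2 + kx**2
    # Fourier transform of a real-space Gaussian with std sigma_vox.
    transfer = np.exp(-2.0 * (np.pi * sigma_vox) ** 2 * k2)
    return np.fft.ifftn(np.fft.fftn(volume) * transfer).real

# Toy example: pure noise stands in for the noisy flexible region.
rng = np.random.default_rng(0)
noisy = rng.normal(size=(32, 32, 32))
smoothed = gaussian_lowpass(noisy, sigma_vox=2.0)
print("std before:", noisy.std(), "std after:", smoothed.std())
```

After smoothing, contouring and segmenting the filtered map should produce fewer speckle-sized segments.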

You might also want to increase the “display at most {parameter} regions” parameter. For large proteins, or maps which don’t segment very well, I find that 60 is far too low.