Hello, I’m currently running 3D Flex training on an H800. However, the job has been stuck at iteration 7 for the past two days without producing any errors. I’m unsure what to do next and would greatly appreciate your assistance.

Welcome to the forum @fxminato.

What is H800?

Please can you collect the following information on the worker computer where the job is running and post

- the output of the command `htop`
- the output of the command `nvidia-smi -L`
- the output of the command `free -g`
- the output of the command `cat /sys/kernel/mm/transparent_hugepage/enabled`
- the output of the command `uname -a`

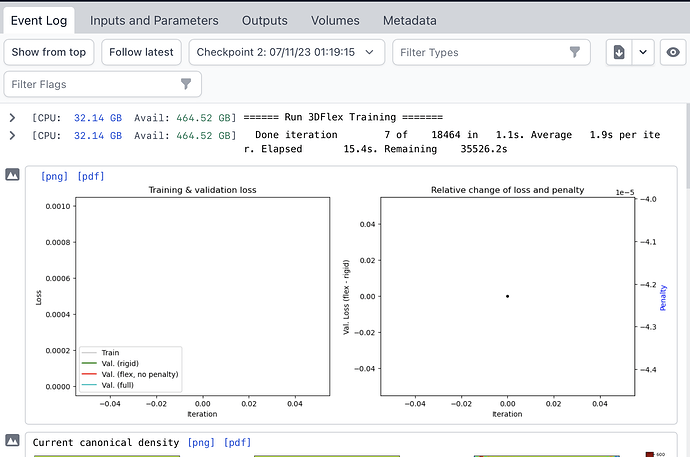

Thank you for your response. H800 refers to the NVIDIA H800 SXM5. Our 3D-Flex training job is P2 J20, and although it appears to be running normally, as shown below, it has actually been stuck for more than a day.

bcl@bimsa-SYS-421GE-TNRT:~$ htop

Avg[|||||||||||||||||| 20.6%] Tasks: 148, 1025 thr; 34 running

Mem[|||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||13.9G/504G] Load average: 34.37 33.63 33.28

Swp[| 7.50M/2.00G] Uptime: 1 day, 02:35:08

PID USER PRI NI VIRT RES SHR S CPU%▽MEM% TIME+ Command

4720 bcl 20 0 66.2G 5787M 448M S 3201 1.1 840h python -c import cryosparc_compute.run as run; run.run() --project P2 --job J20 --master_hostname bimsa-SYS-421GE-TNR

5189 bcl 20 0 66.2G 5787M 448M R 100. 1.1 26h15:51 python -c import cryosparc_compute.run as run; run.run() --project P2 --job J20 --master_hostname bimsa-SYS-421GE-TNR

5198 bcl 20 0 66.2G 5787M 448M R 100. 1.1 26h15:51 python -c import cryosparc_compute.run as run; run.run() --project P2 --job J20 --master_hostname bimsa-SYS-421GE-TNR

5219 bcl 20 0 66.2G 5787M 448M R 100. 1.1 26h15:51 python -c import cryosparc_compute.run as run; run.run() --project P2 --job J20 --master_hostname bimsa-SYS-421GE-TNR

5240 bcl 20 0 66.2G 5787M 448M R 100. 1.1 26h15:51 python -c import cryosparc_compute.run as run; run.run() --project P2 --job J20 --master_hostname bimsa-SYS-421GE-TNR

5246 bcl 20 0 66.2G 5787M 448M R 100. 1.1 26h15:51 python -c import cryosparc_compute.run as run; run.run() --project P2 --job J20 --master_hostname bimsa-SYS-421GE-TNR

5248 bcl 20 0 66.2G 5787M 448M R 100. 1.1 26h15:50 python -c import cryosparc_compute.run as run; run.run() --project P2 --job J20 --master_hostname bimsa-SYS-421GE-TNR

5259 bcl 20 0 66.2G 5787M 448M R 100. 1.1 26h15:51 python -c import cryosparc_compute.run as run; run.run() --project P2 --job J20 --master_hostname bimsa-SYS-421GE-TNR

5140 bcl 20 0 66.2G 5787M 448M R 100. 1.1 26h15:51 python -c import cryosparc_compute.run as run; run.run() --project P2 --job J20 --master_hostname bimsa-SYS-421GE-TNR

5143 bcl 20 0 66.2G 5787M 448M R 100. 1.1 26h15:51 python -c import cryosparc_compute.run as run; run.run() --project P2 --job J20 --master_hostname bimsa-SYS-421GE-TNR

5155 bcl 20 0 66.2G 5787M 448M R 100. 1.1 26h15:51 python -c import cryosparc_compute.run as run; run.run() --project P2 --job J20 --master_hostname bimsa-SYS-421GE-TNR

5166 bcl 20 0 66.2G 5787M 448M R 100. 1.1 26h15:51 python -c import cryosparc_compute.run as run; run.run() --project P2 --job J20 --master_hostname bimsa-SYS-421GE-TNR

5173 bcl 20 0 66.2G 5787M 448M R 100. 1.1 26h15:51 python -c import cryosparc_compute.run as run; run.run() --project P2 --job J20 --master_hostname bimsa-SYS-421GE-TNR

5179 bcl 20 0 66.2G 5787M 448M R 100. 1.1 26h15:50 python -c import cryosparc_compute.run as run; run.run() --project P2 --job J20 --master_hostname bimsa-SYS-421GE-TNR

5183 bcl 20 0 66.2G 5787M 448M R 100. 1.1 26h15:51 python -c import cryosparc_compute.run as run; run.run() --project P2 --job J20 --master_hostname bimsa-SYS-421GE-TNR

F1Help F2Setup F3SearchF4FilterF5Tree F6SortByF7Nice -F8Nice +F9Kill F10Quit

bcl@bimsa-SYS-421GE-TNRT:~$ nvidia-smi -L

GPU 0: NVIDIA H800 PCIe (UUID: GPU-8d55c01a-e897-ba49-47d0-b9dc2439a35b)

GPU 1: NVIDIA H800 PCIe (UUID: GPU-2361418d-0088-744e-341a-e6d6f669f1c0)

GPU 2: NVIDIA H800 PCIe (UUID: GPU-3a965ccf-016e-76a3-7ca3-dbb7ab817e39)

GPU 3: NVIDIA H800 PCIe (UUID: GPU-d7246aa3-6cd5-89ca-cff8-27f5751a2155)

GPU 4: NVIDIA H800 PCIe (UUID: GPU-1c77d065-aa3a-b7cb-97b8-31f14d2e9fca)

GPU 5: NVIDIA H800 PCIe (UUID: GPU-38614431-822e-bf14-9e5b-2f4c1310087d)

GPU 6: NVIDIA H800 PCIe (UUID: GPU-ef3fd2e8-0062-7c2a-329a-1fb08f31f2a3)

GPU 7: NVIDIA H800 PCIe (UUID: GPU-860e9bfc-e017-ae66-2ae2-c0da1f388254)

bcl@bimsa-SYS-421GE-TNRT:~$ free -g

total used free shared buff/cache available

Mem: 503 12 2 0 488 487

Swap: 1 0 1

bcl@bimsa-SYS-421GE-TNRT:~$ cat /sys/kernel/mm/transparent_hugepage/enabled

always [madvise] never

bcl@bimsa-SYS-421GE-TNRT:~$ uname -a

Linux bimsa-SYS-421GE-TNRT 6.2.0-36-generic #37~22.04.1-Ubuntu SMP PREEMPT_DYNAMIC Mon Oct 9 15:34:04 UTC 2 x86_64 x86_64 x86_64 GNU/Linux

Please can you try whether the command

sudo sh -c "echo never >/sys/kernel/mm/transparent_hugepage/enabled"

“revives” the job? (background).
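For anyone checking the same setting: in the output of `cat /sys/kernel/mm/transparent_hugepage/enabled`, the bracketed value is the mode currently in effect (`madvise` in the output posted above). Below is a minimal Python sketch for reading it, for example to confirm that the `echo never` command took effect. The helper name `active_thp_mode` is made up for illustration and is not part of CryoSPARC.

```python
from pathlib import Path

# Standard sysfs location of the transparent hugepage (THP) setting on Linux.
THP_PATH = Path("/sys/kernel/mm/transparent_hugepage/enabled")


def active_thp_mode(text: str) -> str:
    """Return the bracketed (active) mode from a line like 'always [madvise] never'."""
    for token in text.split():
        if token.startswith("[") and token.endswith("]"):
            return token[1:-1]
    raise ValueError(f"no active mode found in {text!r}")


if __name__ == "__main__":
    if THP_PATH.exists():
        print("active THP mode:", active_thp_mode(THP_PATH.read_text()))
    else:
        print("THP sysfs file not found on this system")
```

Note that a value written with `echo` to this file does not persist across reboots, so the check is worth repeating after a restart.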

Hi, we have updated CryoSPARC to v4.4 and the problem has been solved. Thank you for replying~
