There is little public data available from Apollo detectors. I had a GPU free, so I decided to take a quick look at whether they show the heavy late-frame dose weighting that many report with K2/K3 data, or the milder overweighting I see with our Falcon data. I’ve found only one Falcon dataset of ours with “bad” overweighting in the late frames, and it’s nowhere near as bad as what others have reported with K2/K3s.

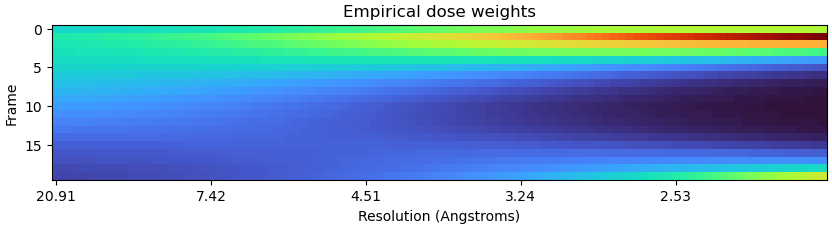

EMPIAR 11261 (the high dose rate dataset) is the only one with RBMC output right now:

Run with defaults, except “extensive” for hyperparameter search. This overweighting is a bit worse than the absolute worst I’ve seen from a Falcon 4, but nothing like that demonstrated by others. I’ll check the lower dose rate data also as time permits.

Wish I knew why sometimes the RBMC plots are short while other times they’re significantly longer. Doesn’t seem frame count related…

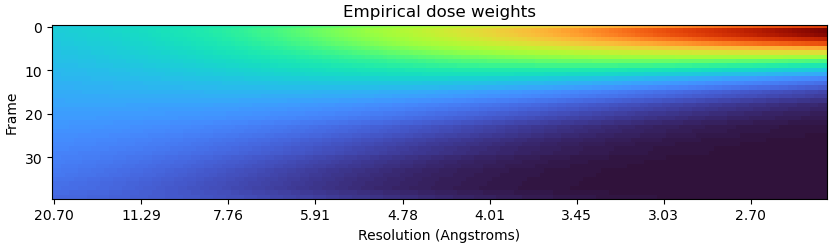

For a ryanodine receptor dataset (2.4MDa particle with a lot of signal) we see very modest overweighting of late frames on K3 compared to what we see for smaller (<200kDa) particles:

So I suspect maybe it is partially a particle size issue?

For this dataset, the refined parameters using “Extensive” search are:

Spatial prior strength: 2.6226e-02

Spatial correlation distance: 18000

Acceleration prior strength: 7.8811e-04

So clearly a correlation distance of 500 is not optimal for all samples.

(In this case global res improved by 0.15Å with RBMC)

Side note - @hsnyder it would be helpful I think to have some per-resolution dose weight vs frame line plots in this output. E.g. dose weight vs frame at 4Å, for example. This would make it a lot easier to judge the shape of the dose weight curve than trying to eyeball the difference between dark blue and light blue on this plot…

In the meantime are the weights written anywhere where they can be replotted with CS tools?

I’ll check a few virus runs; they’re a bit larger (at least in terms of total MW). Don’t have any giant virus (GDa scale) K3 data, but do have some AAV at around 5MDa. Will need to dig through offline backups though.

…

I have a tangentially related question. I’m running RBMC on some focussed refinements, and the extensive hyperparameter calculation is taking forever (3.5 days so far with no sign of finishing), so I’m loath to repeat it for each area. I think dose weights would be safe to re-use (it’s the same dataset after all), and hyperparams should also be safe to re-use (again, same dataset). That would save a lot of time compared with re-running steps 1 and 2 of RBMC another four times. In a brief test run with a smaller dataset, I got the same hyperparams across three different runs, but that was a much smaller dataset and a much smaller particle.

Opinions on just using params and dose weights (if sane) from the first run on the others?

> Side note - @hsnyder it would be helpful I think to have some per-resolution dose weight vs frame line plots in this output. E.g. dose weight vs frame at 4Å, for example. This would make it a lot easier to judge the shape of the dose weight curve than trying to eyeball the difference between dark blue and light blue on this plot…

This sounds like a useful idea, thanks @olibclarke. I’ll record this.

> In the meantime are the weights written anywhere where they can be replotted with CS tools?

Yes. The hyperparameters output group contains a `refmotion_doseweights/path` column which will tell you what file to load to get the actual dose weights. It’s a simple array with shape (frame, box size).
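For anyone wanting the per-resolution line plot in the meantime, here is a minimal sketch of pulling one "dose weight vs frame" curve out of an array with shape (frame, box size). It rests on an assumption the thread does not confirm: that the second axis indexes radial spatial frequency in Fourier pixels, so that index `k` corresponds to a resolution of `box_size * pixel_size / k` Å. The function names and the `pixel_size_A` / `resolution_A` parameters are hypothetical, not part of CryoSPARC or cryosparc-tools.

```python
def shell_index(box_size, pixel_size_A, resolution_A):
    """Fourier shell index for a target resolution.

    ASSUMPTION: axis 1 of the dose-weight array is radial frequency in
    Fourier pixels, so k = box_size * pixel_size / resolution.
    """
    k = round(box_size * pixel_size_A / resolution_A)
    # Valid shells run from 1 up to Nyquist (box_size // 2).
    if not 0 < k <= box_size // 2:
        raise ValueError(
            f"{resolution_A} A maps to shell {k}, outside 1..{box_size // 2}"
        )
    return k


def weight_vs_frame(weights, pixel_size_A, resolution_A):
    """Return one dose weight per frame at the requested resolution.

    `weights` is any 2D array-like with shape (frame, box size), e.g. a
    list of lists or a NumPy array.
    """
    box_size = len(weights[0])
    k = shell_index(box_size, pixel_size_A, resolution_A)
    return [float(row[k]) for row in weights]
```

The returned list can be handed to any plotting tool with frame number on the x axis. Calling it for a few resolutions (say 3 Å, 4 Å and 6 Å) and overlaying the curves makes the shape of the late-frame weighting much easier to compare than colour shades on a 2D plot. If the second axis turns out to mean something else, only `shell_index` needs to change.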

> Opinions on just using params and dose weights (if sane) from the first run on the others?

@rbs_sci, I’d say it’s definitely worth a try. I’d be interested to hear your findings, but in my experience getting the parameters “in the ballpark” often gets you most of the total improvement, and the optimization landscape is somewhat consistent across datasets. There are exceptions to that, but IMO it is worth a try.

Honestly, depending on the SNR, I think there are many cases where the parameter tuning really isn’t that precise/sensitive anyway. There’s definitely some room for an improved model in the future, but with the current one, sometimes frames are just so noisy that once you get “similar-ish”, it’s hard to know which of any two sets of hyperparameters is really “better”.