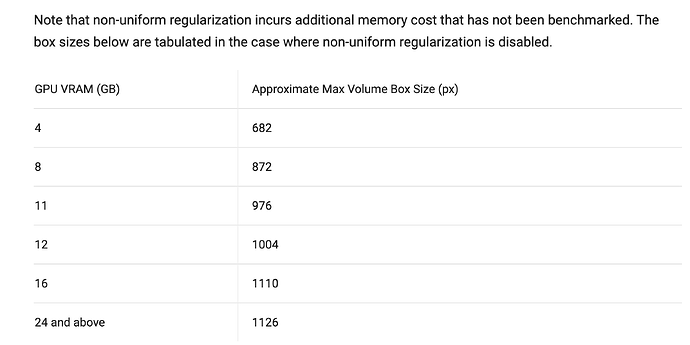

A box size of ~600-700 pix is the max for me on an RTX 3090 or A5000 (all of our workstations are based on 24 GB cards), and NVLink does not help. In general, I have found that Homogeneous Reconstruction and Homogeneous Refinement will run with larger box sizes, while NU-Refine will not.

3D Flex really only runs at 440 pix; see @hbridges1's post: https://discuss.cryosparc.com/t/flex-reconstruction-failing/15016/4?u=mark-a-nakasone.

RELION does things a little differently, but a lot of people see the table at https://guide.cryosparc.com/processing-data/tutorials-and-case-studies/performance-metrics and think a 1024 pix box size will run fine on a 3090/A5000. It will not.
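To get a feel for why 1024 pix is so much harder than 600-700 pix, here is a rough back-of-the-envelope sketch. It only computes the size of a single cubic volume and is not CryoSPARC's actual memory model (a refinement holds many such arrays plus particle data, and I am only assuming 4-byte float32 and 8-byte complex64 voxels for illustration). The point is the cubic scaling: a 1024 pix box has roughly 3 to 5 times as many voxels as a 700 or 600 pix box.

```python
def volume_gib(box: int, bytes_per_voxel: int = 4) -> float:
    """Size in GiB of ONE cubic volume with `box` voxels per edge.

    bytes_per_voxel=4 assumes float32, 8 assumes complex64 (assumptions
    for illustration, not taken from CryoSPARC itself).
    """
    return box ** 3 * bytes_per_voxel / 2 ** 30


for box in (440, 600, 700, 1024):
    ratio = (box / 700) ** 3  # voxel count relative to a 700 pix box
    print(
        f"{box:>5} pix: float32 {volume_gib(box):.2f} GiB, "
        f"complex64 {volume_gib(box, 8):.2f} GiB, "
        f"{ratio:.2f}x the voxels of a 700 pix box"
    )
```

A single 1024 pix float32 volume is already 4 GiB, so it does not take many working copies to fill a 24 GB card.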

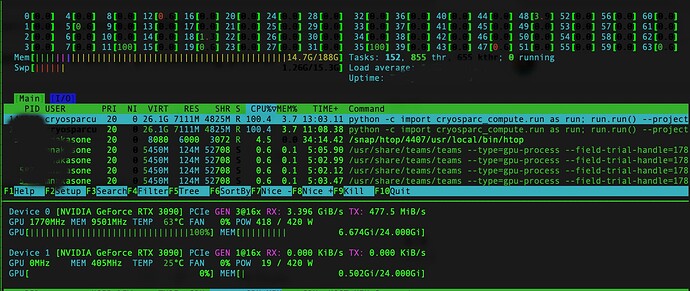

You can use `nvtop` (GPU core use, VRAM, and power) and `htop` (CPU and RAM) to monitor a job while it runs. I find it helpful to watch some jobs through tmux with nvtop and htop.
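If you want an actual log on disk rather than watching live, one option is to poll `nvidia-smi` and write the samples to a CSV. This is just a minimal sketch, assuming `nvidia-smi` is on the PATH; the function names (`parse_rows`, `sample_gpus`, `log_gpus`) and the output file name are made up for this example.

```python
import csv
import io
import subprocess
import time

# Standard nvidia-smi --query-gpu field names.
FIELDS = ["timestamp", "index", "utilization.gpu",
          "memory.used", "memory.total", "power.draw"]


def parse_rows(csv_text):
    """Parse nvidia-smi 'csv,noheader,nounits' output into a list of dicts."""
    reader = csv.reader(io.StringIO(csv_text), skipinitialspace=True)
    return [dict(zip(FIELDS, (cell.strip() for cell in row)))
            for row in reader if row]


def sample_gpus():
    """Take one sample of every GPU (requires nvidia-smi to be installed)."""
    result = subprocess.run(
        ["nvidia-smi",
         "--query-gpu=" + ",".join(FIELDS),
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_rows(result.stdout)


def log_gpus(path, interval_s=5.0, samples=10):
    """Append `samples` readings, `interval_s` seconds apart, to a CSV file."""
    with open(path, "a", newline="") as fh:
        writer = csv.DictWriter(fh, fieldnames=FIELDS)
        if fh.tell() == 0:  # new/empty file: write the header once
            writer.writeheader()
        for _ in range(samples):
            writer.writerows(sample_gpus())
            fh.flush()
            time.sleep(interval_s)


# Example (hypothetical file name): one hour of samples every 5 s.
# log_gpus("gpu_log.csv", interval_s=5, samples=720)
```

With `nounits`, memory values are in MiB and power in W, so `memory.used` can be plotted directly against `memory.total` to see how close a job gets to the 24 GB limit before it fails.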

Sometimes, if the GPUs are not under heavy load, you can override the scheduler in CryoSPARC (run now on a specific GPU: 0, 1, 2, ... n), and it can save time.

I am grateful to @rbs_sci, the CryoSPARC team, and the other users who post their experiences. I have seen many of my users assume they had the wrong input, or that something was wrong with the computer, when the box size was simply too large for the GPU.